Alright, there are a ton of articles and blog posts that revolve around migrating to vSphere Distributed Switches, and they are all great! I hate to throw yet another one out there, but as I've stated before, things tend to sink in when I post them here. Call it selfish if you want – I'm just going to call it studying Objective 2.1 in the blueprint 🙂

Before we get too involved in the details, I'll go through a few key pieces of information. As you can see below, there are a lot of port groups that I will need to migrate. These are in fact the port groups that are set up by default with AutoLab. Also, I'm assuming you have redundant NICs set up on all your vSwitches. This will allow us to migrate all of our VM networks and port groups without incurring any downtime. As stated before, there are many blog posts around this subject and many different ways to do the migration. This is just the way I've done it in the past; I'm sure you can do it in fewer steps, but this is the process I've followed.

Step 1 – Create the shell

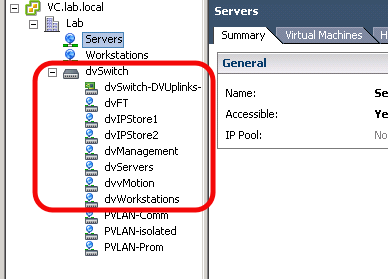

So the first step is to create our distributed switch. This is pretty simple! Just head into your network view and select 'New vSphere Distributed Switch'. Follow the wizard; it's not that hard. Pay attention to the number of uplinks you allow: you need at least as many uplinks in your distributed switch as you have physical adapters assigned to the standard switches on each host. Also, I usually add my hosts to the distributed switch during this process, just without importing any physical NICs. Basically, we're left with a distributed switch containing our hosts with no uplinks assigned. Once we have our switch, we need to duplicate all of the port groups we wish to migrate (Management, vMotion, FT, VM, etc.). If you are following along with AutoLab, you should end up with something similar to the following (ignore my PVLAN port groups – that's another blog post).
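
To make the uplink rule concrete, here is a small Python sketch. It does not call any vSphere API. It works on a hypothetical inventory of hosts, vSwitches and vmnic names (the host names and the two-vSwitch, four-NIC layout are assumptions) and checks that the uplink count you chose in the wizard covers the host with the most NICs.

```python
# Sketch only: a hypothetical host -> vSwitch -> vmnic inventory, not a vSphere API call.
# The two-vSwitch, four-NIC layout is assumed; replace it with what your hosts actually have.
STANDARD_SWITCHES: dict[str, dict[str, list[str]]] = {
    "host1": {"vSwitch0": ["vmnic0", "vmnic1"], "vSwitch1": ["vmnic2", "vmnic3"]},
    "host2": {"vSwitch0": ["vmnic0", "vmnic1"], "vSwitch1": ["vmnic2", "vmnic3"]},
}


def required_uplinks(inventory: dict[str, dict[str, list[str]]]) -> int:
    """Largest number of physical NICs that any one host has across its standard switches."""
    return max(
        sum(len(nics) for nics in switches.values())
        for switches in inventory.values()
    )


def check_uplink_count(dvs_uplinks: int, inventory: dict[str, dict[str, list[str]]]) -> None:
    """Raise if the dvSwitch was created with too few uplinks to take over every NIC."""
    needed = required_uplinks(inventory)
    if dvs_uplinks < needed:
        raise ValueError(f"dvSwitch has {dvs_uplinks} uplinks, but a host needs {needed}")


check_uplink_count(4, STANDARD_SWITCHES)  # four uplinks for four NICs per host: no error
```

The count is taken per host rather than summed across the cluster because each host maps its own vmnics onto the same set of dvUplinks.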

One note about the uplinks that you can't see in the image above: I've gone into each of my port groups and set up the teaming/failover policy to mimic that of the standard switches. So, for the port groups that were assigned to vSwitch0, I've set dvUplink1 and 2 as active and 3/4 as unused. For those on vSwitch1, 3/4 are active and 1/2 are unused. This gives us the same connectivity as the standard switches and segregates the traffic exactly the same way the standard switches did. This can be done by editing the settings of your port group and modifying the Teaming and Failover section. See below.
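
The active/unused split above can be written down as a lookup table. This is a sketch under assumptions: the port group names, and which vSwitch each one originally lived on, are illustrative rather than AutoLab's exact layout. Nothing here applies the policy for you; it only produces the order you would enter in the Teaming and Failover section.

```python
# Sketch only: port group names and their original vSwitch are assumed for illustration.
ORIGIN_SWITCH = {
    "Management": "vSwitch0",
    "vMotion": "vSwitch0",
    "Servers": "vSwitch1",
    "Workstations": "vSwitch1",
}

# (active, unused) dvUplinks for port groups that came from each standard switch.
UPLINK_SPLIT = {
    "vSwitch0": (["dvUplink1", "dvUplink2"], ["dvUplink3", "dvUplink4"]),
    "vSwitch1": (["dvUplink3", "dvUplink4"], ["dvUplink1", "dvUplink2"]),
}


def teaming_policy(origin: dict[str, str]) -> dict[str, dict[str, list[str]]]:
    """Give each dvPortgroup the active/unused uplink order of the vSwitch it came from."""
    policy = {}
    for portgroup, vswitch in origin.items():
        active, unused = UPLINK_SPLIT[vswitch]
        policy[portgroup] = {"active": list(active), "unused": list(unused)}
    return policy


for name, order in teaming_policy(ORIGIN_SWITCH).items():
    print(f"{name}: active={order['active']} unused={order['unused']}")
```

Marking the other pair as unused (rather than standby) keeps each traffic type pinned to the same two physical NICs it used on its old vSwitch.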

Step 2 – Split up your NICs

Alright! Now that we have a shell of a distributed switch configured, we can begin the migration process. This is the part I mentioned at the beginning of the post that can be performed in a million and one ways! This is how I like to do it. From the host's networking configuration page, be sure you have switched to the vSphere Distributed Switch context. The first thing we will do is assign one physical adapter from every vSwitch on each host to an uplink on our dvSwitch. Since we have redundant NICs on both vSwitches, we are able to do this without affecting our connectivity (hopefully). To do this, select the 'Manage Physical Adapters' link in the top right-hand corner of the screen. This will display our uplinks with the ability to add NICs to each one.

Basically, we want to add vmnic0 to dvUplink1 and vmnic2 to dvUplink3. This puts one NIC from each standard switch behind the active uplinks we set up previously: vmnic0 (from vSwitch0) backs dvUplink1, which is active for the former vSwitch0 port groups, and vmnic2 (from vSwitch1) backs dvUplink3, which is active for the former vSwitch1 port groups. Meanwhile, each standard switch keeps its other NIC. It's hard to explain, but once you start doing it you should understand. To do this, just click the 'Click to Add NIC' links on dvUplink1 and 3 and assign the proper NICs. You will get a warning letting you know that you are removing a NIC from one switch and adding it to another.
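
Why this keeps everything online is easier to see as a check. The sketch below models one host with an assumed layout (vmnic0/vmnic1 on vSwitch0, vmnic2/vmnic3 on vSwitch1) and tests two conditions after the move: every standard switch still has a NIC, and every active uplink pair on the dvSwitch has one too. The function names are hypothetical; this is plain Python, not vCenter.

```python
# Sketch only: models one host's NICs before and after Step 2; nothing here talks to vCenter.
# vmnic0/vmnic1 on vSwitch0 and vmnic2/vmnic3 on vSwitch1 is an assumed layout.
BEFORE = {"vSwitch0": ["vmnic0", "vmnic1"], "vSwitch1": ["vmnic2", "vmnic3"]}
ACTIVE_GROUPS = [("dvUplink1", "dvUplink2"), ("dvUplink3", "dvUplink4")]


def apply_moves(vswitches, moves):
    """Return (NICs left on each vSwitch, dvUplink -> NIC) after moving the NICs in `moves`."""
    remaining = {sw: [nic for nic in nics if nic not in moves] for sw, nics in vswitches.items()}
    assigned = {uplink: nic for nic, uplink in moves.items()}
    return remaining, assigned


def connectivity_ok(remaining, assigned, active_groups):
    """True if both switches can still pass traffic while the migration is half done."""
    # Each standard switch keeps at least one NIC for the port groups still on it.
    if any(not nics for nics in remaining.values()):
        return False
    # Each active uplink pair on the dvSwitch has at least one NIC behind it.
    return all(any(uplink in assigned for uplink in group) for group in active_groups)


good = apply_moves(BEFORE, {"vmnic0": "dvUplink1", "vmnic2": "dvUplink3"})
bad = apply_moves(BEFORE, {"vmnic0": "dvUplink1", "vmnic1": "dvUplink2"})
print(connectivity_ok(*good, ACTIVE_GROUPS))  # True
print(connectivity_ok(*bad, ACTIVE_GROUPS))   # False: vSwitch0 is left with no NIC
```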

Be sure you repeat the NIC additions on each host you have, paying close attention to the uplinks you are assigning them to.
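
Since the same assignment has to be repeated on every host, a quick comparison against the plan helps catch a NIC that landed on the wrong uplink. The host names and per-host data below are made up for illustration; in practice you would read them off each host's 'Manage Physical Adapters' screen.

```python
# Sketch only: host names and their current assignments are hypothetical sample data.
EXPECTED = {"dvUplink1": "vmnic0", "dvUplink3": "vmnic2"}

per_host = {
    "host1": {"dvUplink1": "vmnic0", "dvUplink3": "vmnic2"},
    "host2": {"dvUplink1": "vmnic0", "dvUplink4": "vmnic2"},  # vmnic2 landed on the wrong uplink
    "host3": {"dvUplink1": "vmnic0", "dvUplink3": "vmnic2"},
}


def hosts_to_fix(assignments, expected):
    """Hosts whose dvUplink -> NIC mapping differs from the plan, with what differs."""
    problems = {}
    for host, actual in sorted(assignments.items()):
        uplinks = sorted(set(actual) | set(expected))
        diff = {
            uplink: (expected.get(uplink), actual.get(uplink))  # (planned NIC, actual NIC)
            for uplink in uplinks
            if expected.get(uplink) != actual.get(uplink)
        }
        if diff:
            problems[host] = diff
    return problems


print(hosts_to_fix(per_host, EXPECTED))
```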

Step 3 – Migrate our vmkernel port groups

Once we have a couple of NICs assigned to our dvSwitch, we can begin to migrate our vmkernel interfaces. To do this, switch to the Networking inventory view, right-click our dvSwitch and select 'Manage Hosts'. Select the hosts we want to migrate (usually all of them in the cluster). The NICs that we just added should already be selected in the 'Select Physical Adapters' dialog. Leave this as the default; we will come back and grab the other NICs once we have successfully moved our vmkernel interfaces and virtual machine networking. It's the next screen, the 'Network Connectivity' dialog, where we will perform most of the work. This is where we say which source port group should be migrated to which destination port group. It's an easy step: simply adjusting the dropdown beside each port group does the trick. See below. When you're done, skip the VM Networking for now and click 'Finish'.
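
The 'Network Connectivity' page is essentially a mapping from source port group to destination port group. A minimal sketch of that mapping follows. The vmk numbers, port group names and dvPortgroup names are hypothetical, and it deliberately treats a missing destination as an error, because in this walkthrough every vmkernel interface is meant to move.

```python
# Sketch only: vmk names, source port groups, and dvPortgroup names are hypothetical.
VMKERNELS = {"vmk0": "Management Network", "vmk1": "vMotion", "vmk2": "FT"}
DESTINATIONS = {"Management Network": "dv-Management", "vMotion": "dv-vMotion", "FT": "dv-FT"}


def vmk_plan(vmks: dict[str, str], destinations: dict[str, str]) -> dict[str, str]:
    """Map each vmkernel interface to its destination dvPortgroup, refusing gaps."""
    missing = sorted({pg for pg in vmks.values() if pg not in destinations})
    if missing:
        # The wizard would just leave these in place; here every vmk is expected to move.
        raise ValueError(f"no destination port group chosen for: {', '.join(missing)}")
    return {vmk: destinations[pg] for vmk, pg in vmks.items()}


print(vmk_plan(VMKERNELS, DESTINATIONS))
```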

After a little bit of time, we should have all of our vmkernel interfaces migrated to our distributed switch. This can be confirmed by looking at our standard switches and ensuring we see no vmkernel interfaces. What you might still see, though, is VMs attached to Virtual Machine port groups on the standard switches. This is what we will move next.
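
The confirmation step amounts to an audit of what is still attached to the standard switches. Here is a sketch over a hypothetical snapshot of one host (the switch, port group and VM names are assumptions): no vmkernel interfaces should remain, while VMs are still expected at this point.

```python
# Sketch only: a hypothetical snapshot of one host's standard switches after Step 3.
SNAPSHOT = {
    "vSwitch0": {"Management Network": {"vmkernel": [], "vms": []}},
    "vSwitch1": {
        "Servers": {"vmkernel": [], "vms": ["web01"]},
        "Workstations": {"vmkernel": [], "vms": ["ws01", "ws02"]},
    },
}


def audit(snapshot):
    """Return (vmkernel interfaces, VMs) still attached to standard-switch port groups."""
    vmks, vms = [], []
    for switch, portgroups in snapshot.items():
        for portgroup, members in portgroups.items():
            vmks += [f"{switch}/{portgroup}/{name}" for name in members["vmkernel"]]
            vms += [f"{switch}/{portgroup}/{name}" for name in members["vms"]]
    return vmks, vms


leftover_vmks, leftover_vms = audit(SNAPSHOT)
assert not leftover_vmks, f"vmkernel interfaces still on standard switches: {leftover_vmks}"
print(leftover_vms)  # expected at this point; these move in Step 4
```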

Step 4 – Move your virtual machine port groups

Again, this is done through the Networking inventory and is very simple. Right-click your dvSwitch and select 'Migrate Virtual Machine Networking'. Set the VM network you wish to migrate as your source, and the one you created for it on your dvSwitch as your destination (see below). When you click Next, you will be presented with a list of VMs on that network and whether the destination network is accessible. If we have done everything right up to this point, it should be. Select all your VMs and complete the migration wizard.
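
The wizard's accessibility column boils down to whether each VM's host can reach the destination dvPortgroup. This sketch models that with a hypothetical VM-to-host mapping and a set of hosts where the destination is reachable. The names are made up, and it only illustrates the split; it does not migrate anything.

```python
# Sketch only: VM names, host placement, and the set of hosts that can reach the
# destination dvPortgroup are hypothetical inputs you would read from vCenter.
def split_for_migration(vms_on_source: dict[str, str], hosts_with_destination: set[str]):
    """Return (VMs safe to move now, VMs whose host cannot reach the destination yet)."""
    ready = sorted(vm for vm, host in vms_on_source.items() if host in hosts_with_destination)
    blocked = sorted(vm for vm, host in vms_on_source.items() if host not in hosts_with_destination)
    return ready, blocked


servers = {"web01": "host1", "db01": "host2", "app01": "host3"}
ready, blocked = split_for_migration(servers, {"host1", "host2"})
print(ready)    # ['db01', 'web01']
print(blocked)  # ['app01']: host3 cannot reach the destination network yet
```

If anything lands in the blocked list, that usually points back at Step 1 or Step 2 on that host (not joined to the dvSwitch, or no NIC behind the active uplinks).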

This process will have to be repeated for each and every virtual machine port group you wish to migrate; in the case of AutoLab, that means Servers and Workstations. Once this is done, we have successfully migrated all of our port groups to our distributed switch.

Step 5 – Pick up the trash!

The only thing left to do at this point is to go back to each host's view of the distributed switch, select 'Manage Physical Adapters' and assign the remaining two NICs from our standard switches to the proper uplinks in our dvSwitch.
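
For completeness, the final assignment can be sketched too. It assumes the NICs left behind are vmnic1 (on vSwitch0) and vmnic3 (on vSwitch1), and that each goes to the second active uplink of its old switch (dvUplink2 and dvUplink4). Check your own layout, since the post does not name them.

```python
# Sketch only: assumes vmnic1 (vSwitch0) and vmnic3 (vSwitch1) are the NICs left behind,
# and that each goes to the second active uplink of its old switch.
AFTER_STEP_2 = {"dvUplink1": "vmnic0", "dvUplink3": "vmnic2"}
LEFT_ON_STANDARD = {"vSwitch0": ["vmnic1"], "vSwitch1": ["vmnic3"]}
SPARE_UPLINK = {"vSwitch0": "dvUplink2", "vSwitch1": "dvUplink4"}


def assign_remaining(assigned, remaining, spare_uplink):
    """Move each standard switch's last NIC to its spare dvUplink and return the full map."""
    final = dict(assigned)
    for switch, nics in remaining.items():
        if len(nics) != 1:
            raise ValueError(f"{switch} should have exactly one NIC left, found {nics}")
        uplink = spare_uplink[switch]
        if uplink in final:
            raise ValueError(f"{uplink} is already backed by {final[uplink]}")
        final[uplink] = nics[0]
    return dict(sorted(final.items()))


print(assign_remaining(AFTER_STEP_2, LEFT_ON_STANDARD, SPARE_UPLINK))
```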

Step 6 – Celebrate a VCAP pass

And done! This seems like exactly the kind of task someone could be asked to do on the exam. If so, take caution. The last thing you want to do is mess up the Management Network on one of your hosts and lose contact with it! Yikes!